On-board HMI technology is steadily advancing, driven by the evolution of ADAS/AD and the growing use of smartphones and their apps in vehicles. Systems are being developed that switch the HMI communication mode to the one best suited to the surrounding situation. Such a system tracks the driver's line of sight and behavior, and in the future, psychological states such as fatigue and stress while driving will also become measurable. Information about objects several hundred meters from the vehicle, recognized by safety-related sensors and V2I, is conveyed to the driver via HUD or voice, while conditions from several hundred meters up to a couple of kilometers away are presented through the car navigation system, CID, and/or voice.
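The distance-dependent choice of output channel described above can be sketched as a simple lookup. This is a minimal Python illustration, not a description of any production system: the `Channel` enum, the `select_channels` function, and the 300 m and 2,000 m boundaries are hypothetical stand-ins for the text's "hundreds of meters" and "a couple of kilometers".

```python
from enum import Enum
from typing import List


class Channel(Enum):
    HUD = "HUD"
    VOICE = "voice"
    NAVIGATION = "car navigation"
    CID = "CID"


# Hypothetical boundaries: the text only says "hundreds of meters"
# and "a couple of kilometers".
NEAR_LIMIT_M = 300.0
FAR_LIMIT_M = 2000.0


def select_channels(distance_m: float) -> List[Channel]:
    """Pick HMI output channels for an event at distance_m ahead of the vehicle."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m <= NEAR_LIMIT_M:
        # Near range (safety sensors / V2I): immediate channels.
        return [Channel.HUD, Channel.VOICE]
    if distance_m <= FAR_LIMIT_M:
        # Mid range: route-level information on navigation, CID, or voice.
        return [Channel.NAVIGATION, Channel.CID, Channel.VOICE]
    # Beyond the assumed horizon: nothing is pushed to the driver.
    return []


if __name__ == "__main__":
    for d in (150, 1000, 5000):
        print(d, [c.value for c in select_channels(d)])
```

A real system would also weigh the driver's gaze, workload, and the urgency of the event, which the text names as inputs; the sketch keeps only the distance dimension.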

In addition, the system is being integrated with the cloud, where deep learning applied to HMI results identifies the optimal HMI pattern for each individual driver so that personalized services can be delivered via OTA or other channels. This approach is being implemented first in voice-based solutions and will later be extended to HUD, CID, and other devices. As this evolution progresses, on-board HMI is expected to become an autonomously controlled system within an integrated cockpit, mirroring the trend the safety domain is following.

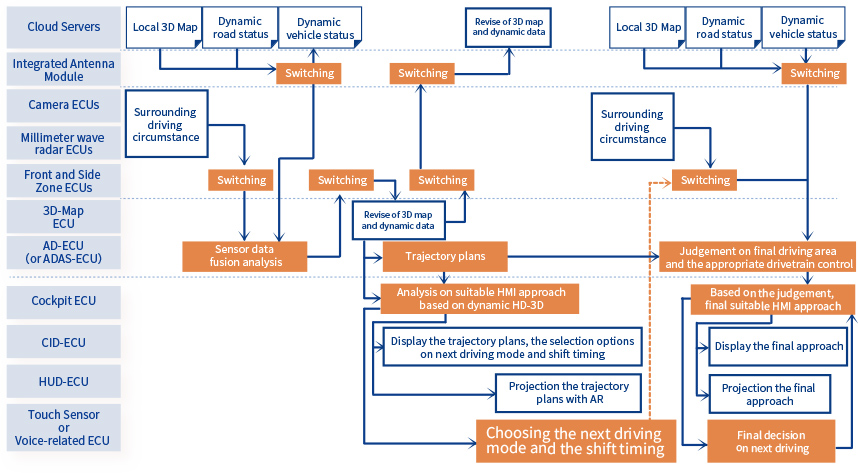

Data transmission process between the ADAS/AD domain and the HMI domain

Next-generation HMI and cockpit systems will be coordinated with the cloud and will evolve toward autonomous control in conjunction with OTA.

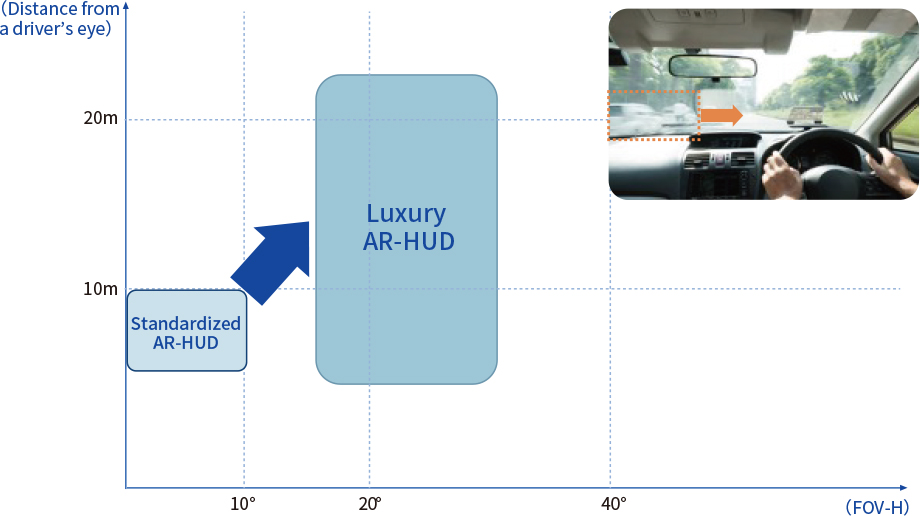

Along this evolution path, CIDs are growing larger, light sources and optical methods are changing, and HUDs in particular are gaining functionality. While HUDs will continue to use LEDs as the light source and liquid crystal as the default optical method, development of HUDs that use DLP optics is becoming active thanks to DLP's superior color reproducibility, and the displayed information is becoming increasingly AR-based. In step with this shift to AR, virtual displays of 70 inches or larger, projected about 10 meters ahead along the driver's line of sight, are likely to be increasingly commercialized. System integration is also progressing in line with the growing capabilities of automated driving sensing; for example, the projection distance is expected to extend up to 20 meters and the FoV (both horizontal and vertical) to more than double.

Display distance and FoV of the next-generation AR-HUD

Compared with the AR-HUDs of 2020 and 2021, the next-generation AR-HUD will offer a display distance of 10 m to 20 m and a horizontal FoV of 10° to 20°. The longer distance and larger image size enable OEMs to seamlessly match the HMI to the wider range of driving conditions detected by ADAS sensors and V2I.
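Why a longer distance and a wider FoV yield a larger image follows from simple geometry: a flat virtual image centered on the line of sight at distance d that spans a horizontal FoV θ has width w = 2d·tan(θ/2). The Python sketch below assumes that idealized model (no optical distortion, image centered on the driver's gaze); the function name `virtual_image_width_m` is hypothetical.

```python
import math

METERS_PER_INCH = 0.0254


def virtual_image_width_m(distance_m: float, hfov_deg: float) -> float:
    """Horizontal width of a flat virtual image at distance_m spanning hfov_deg.

    Idealized geometry: the image is centered on the driver's line of sight,
    so each half of the FoV subtends half of the width.
    """
    if distance_m <= 0 or not 0 < hfov_deg < 180:
        raise ValueError("distance must be positive and FoV within (0, 180) degrees")
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


if __name__ == "__main__":
    # Lower and upper ends of the next-generation range quoted in the text.
    for d, fov in [(10, 10), (20, 20)]:
        w = virtual_image_width_m(d, fov)
        print(f"{d} m, {fov} deg FoV -> width {w:.2f} m ({w / METERS_PER_INCH:.0f} in)")
```

At the lower end (10 m, 10°) the width alone is about 1.75 m (roughly 69 in), in line with the 70-inch-class displays mentioned earlier once the vertical FoV adds to the diagonal; at 20 m and 20° the width grows to about 7.05 m.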

We provide action plans that help make your solution development more adaptive to ever-changing technical trends, and we guide automotive OEMs, tier-1s, and tier-2s in the most appropriate direction through continuous analysis of the latest trends worldwide, including in Europe, North America, and China, in the following segments:

- On-board HMI: particularly systems integrated with the cloud, ADAS/AD, and driver behavior sensing technologies

- Devices: including car navigation systems, CIDs, and HUDs

- Software: AR and on-board OS